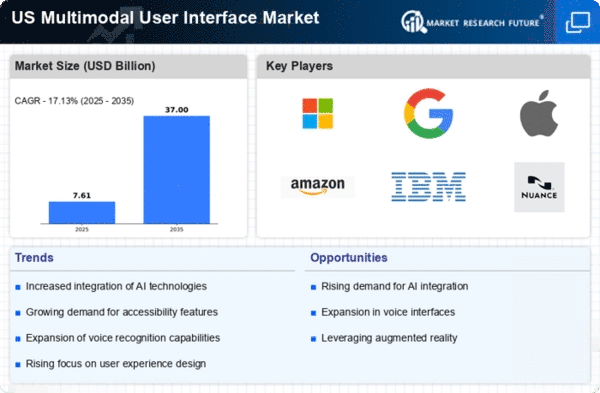

Growth of Smart Devices and IoT

The proliferation of smart devices and the Internet of Things (IoT) is a key driver of the multimodal UI market. As more devices become interconnected, interfaces that seamlessly integrate multiple modalities become essential. The trend is most evident in smart homes, where users interact with several devices through voice, touch, and visual inputs. According to industry reports, the number of connected IoT devices is projected to exceed 75 billion by 2025, creating a vast ecosystem for multimodal interaction. This growth gives developers room to build interfaces that deliver a consistent experience across platforms. As consumers look for cohesive, intuitive control of their smart devices, demand for advanced multimodal solutions is likely to rise.

Increased Focus on Data Security

As concerns over data privacy and security intensify, the multimodal UI market is shifting toward more secure interfaces. Users are increasingly aware of the risks of data breaches and expect solutions that prioritize their privacy. In response, companies are building robust security measures into their multimodal interfaces so that user data is protected across every modality. Recent surveys indicate that 70% of consumers are more likely to engage with brands that demonstrate a commitment to data security. Consequently, businesses are investing in technologies that secure their multimodal interactions, which may include encryption and secure authentication. This focus on security builds user trust and strengthens a company's competitive position in the multimodal UI market.

Rising Demand for User-Centric Interfaces

A growing emphasis on user experience is driving the multimodal UI market. As consumers grow accustomed to intuitive, personalized interactions, businesses are under pressure to adopt interfaces that accommodate diverse user preferences. The shift is evident in sectors such as e-commerce and healthcare, where user-centric design improves engagement and satisfaction. According to recent data, the user experience design market is projected to grow at a CAGR of 15% through 2026, which suggests strong demand for innovative interfaces, including multimodal ones. Companies that prioritize user-centric interfaces are likely to gain a competitive edge because they can better meet their customers' evolving expectations. The trend underscores the value of combining modalities such as voice, touch, and visual elements into seamless interactions that resonate with users.

Emergence of Augmented and Virtual Reality

The rise of augmented reality (AR) and virtual reality (VR) is reshaping the multimodal UI market. These immersive technologies make it possible to create engaging experiences that blend physical and digital interaction. As AR and VR applications go mainstream, businesses are exploring ways to bring these modalities into their interfaces. The AR and VR market is projected to reach $209 billion by 2022, a sign of strong interest in these technologies. Companies are therefore likely to invest in multimodal solutions that use AR and VR to deepen user engagement and interaction. As users increasingly seek innovative, interactive ways to engage with digital content, these immersive experiences may significantly transform the multimodal UI market.

Advancements in Natural Language Processing

Natural language processing (NLP) technologies are significantly influencing the multimodal UI market. As NLP capabilities improve, they enable more sophisticated interactions between users and devices, making the experience more natural and intuitive. Integrating NLP into multimodal interfaces supports voice commands and contextual understanding, which increases user engagement. Recent statistics indicate that the NLP market is expected to reach $43 billion by 2025, reflecting growing investment in technologies that support multimodal interaction. Beyond streamlining communication, NLP opens new applications in customer service, education, and entertainment. As businesses adopt NLP-driven solutions, the multimodal UI market is likely to expand on the demand for more interactive and responsive user interfaces.