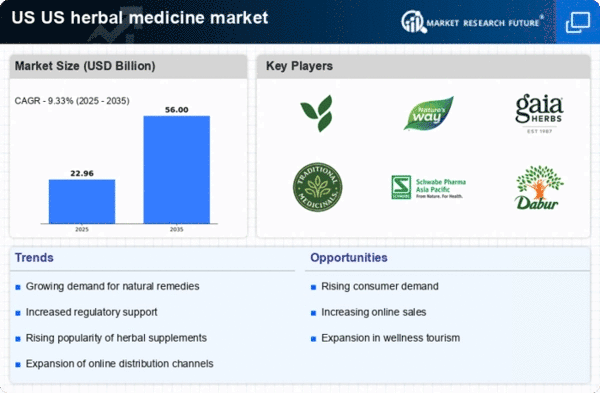

Top Industry Leaders in the US Herbal Medicine Market

Latest US Herbal Medicine Company Updates

Gaia Herbs and Nestlé Health Science announce a research collaboration to explore the potential of adaptogens for cognitive health. The partnership pairs Gaia's botanical expertise with Nestlé's research capabilities, with the aim of building scientific evidence for traditional herbal remedies.

Valerian Labs, a leading botanical extracts manufacturer, acquires Bluebonnet Nutrition, a prominent natural products brand. The acquisition strengthens Valerian Labs' position in the herbal supplement market and expands its product portfolio.

Herbalife Nutrition introduces a new line of plant-based protein powders featuring adaptogenic herbs, reflecting the broader trend of incorporating herbal ingredients into mainstream health and wellness products.

Key Companies in the US Herbal Medicine Market

- Cultivator Natural Products Pvt. Ltd. (India)

- 21st Century HealthCare, Inc. (U.S.)

- Herbalife Nutrition (U.S.)

- ZeinPharma Germany GmbH (Germany)

- Blackmores Limited (Australia)

- Himalaya Global Holdings Ltd. (India)

- Nutraceutical Corporation (U.S.)

- Emami Limited (India)

- Nature's Answer, LLC (U.S.)

- Patanjali Ayurved Limited (India)