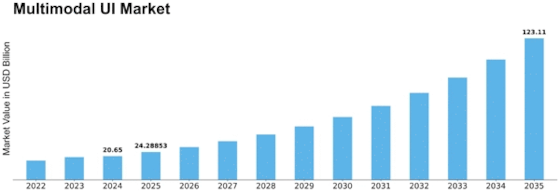

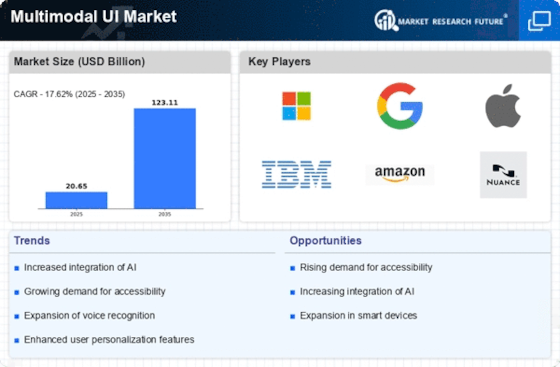

Multimodal UI Size

Multimodal UI Market Growth Projections and Opportunities

The dynamics of the Multimodal User Interface (UI) market are shaped by rising demand for intuitive, interactive, and user-friendly experiences across a wide range of digital platforms. A Multimodal UI combines several interaction modes, including voice commands, gestures, touch, and visual cues, to improve user engagement and accessibility. This fast-changing field sits at the forefront of technological advancement and affects products ranging from smartphones and smart home devices to automotive interfaces and virtual reality applications. Several key drivers contribute to the dynamic nature of the market, reflecting the industry’s pursuit of seamless, immersive, and personalized user experiences.

One primary driver is the growing emphasis on natural and intuitive interactions. Classical input devices such as keyboards and mice are giving way to more diverse forms of communication that fit human conversation patterns. Multimodal interfaces, enabled by natural language processing, computer vision, and other technologies, let users communicate with their devices through a combination of speech, touch, gesture, and other inputs. This shift responds to a growing requirement for interfaces that are not only efficient but also comfortable and user-friendly across different demographics.

The demand for better accessibility and inclusiveness also strongly influences how the Multimodal UI market evolves. By offering multiple interaction modalities, a Multimodal UI accommodates users with different abilities, preferences, and contexts. For instance, people with physical disabilities can use spoken commands, which gives them a barrier-free interface, while touch may be preferred in other situations. This inclusiveness aligns with the goal of designing technologies that are accessible to everyone, including people with disabilities.

The evolution of smart devices and the Internet of Things (IoT) significantly shapes consumer behavior and, in turn, Multimodal UI market trends. With the growth of smart homes, wearables, and connected vehicles, the need for user interfaces that integrate seamlessly with these devices has risen considerably. A Multimodal UI lets users interact with these devices through a combination of spoken commands, gestures, and touch inputs. This interoperability improves the user experience across devices and makes a unified digital ecosystem possible.
