Market Analysis

In-depth Analysis of Multimodal UI Market Industry Landscape

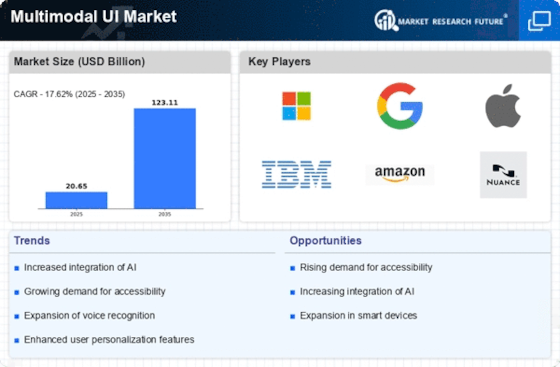

The Multimodal User Interface (UI) Market is a dynamic and influential sector shaped by market factors that together drive its growth and impact across a wide range of applications. One of the main drivers is the growing need for improved user experiences across devices and applications. Multimodal UI, characterized by its ability to integrate multiple modes of interaction such as voice, touch, and gestures, is a key enabler of intuitive and immersive user interfaces in smartphones, smart home devices, automotive systems, and other digital platforms.

Technological innovation is a cornerstone of the Multimodal UI Market. Advances in natural language processing, computer vision, and sensor technologies have enabled intelligent UI solutions that recognize and interpret user input across several modalities. Innovations such as gesture recognition, voice-controlled interfaces, and touch-based interaction are pushing businesses and device manufacturers toward more engaging, user-friendly experiences.

Global economic conditions play a significant role in shaping the Multimodal UI Market. Economic factors influence how much technology companies invest in multimodal UI technologies. During periods of economic growth, funding for research and development tends to increase, leading to innovative UI solutions designed for diverse applications. During recessions, by contrast, organizations may take a more conservative stance, which slows the pace of investment in the Multimodal UI sector.

Regulatory considerations and privacy concerns are important aspects of the Multimodal UI Market. Because multimodal UI solutions often process user data, legal frameworks governing data protection, information security, and ethics become critical. Compliance with these regulations, together with demonstrably responsible and privacy-sensitive design practices, is essential for developers of multimodal UI solutions.

Competitive dynamics also shape the Multimodal UI Market. With several firms offering multimodal UI solutions and competing for market share, differentiators such as accuracy, responsiveness, flexibility, and integration capabilities become vital. The market is marked by continual innovation aimed at the needs of different industries.
